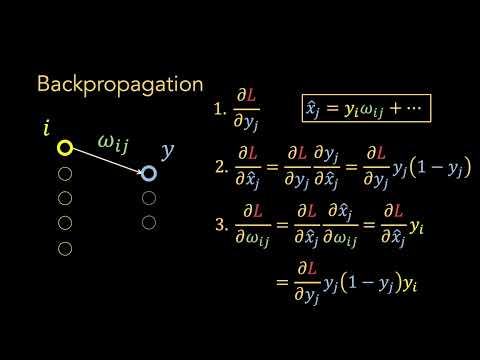

The main ideas behind Backpropagation are super simple, but there are tons of details when it comes time to implementing it. This video shows how to optimize three parameters in a Neural Network simultaneously and introduces some Fancy Notation.

NOTE: This StatQuest assumes that you already know the main ideas behind Backpropagation: ...and that also means you should be familiar with...

- Neural Networks:
- The Chain Rule:
- Gradient Descent:

LAST NOTE: When I was researching this 'Quest, I found this page by Sebastian Raschka to be helpful:

For a complete index of all the StatQuest videos, check out:

If you'd like to support StatQuest, please consider... Patreon: ...or... YouTube Membership: ...buying one of my books, a study guide, a t-shirt or hoodie, or a song from the StatQuest store... ...or just donating to StatQuest!

Lastly, if you want to keep up with me as I research and create new StatQuests, follow me on Twitter:

- 0:00 Awesome song and introduction
- 3:01 Derivatives do not change when we optimize multiple parameters
- 6:28 Fancy Notation
- 10:51 Derivatives with respect to two different weights
- 15:02 Gradient Descent for three parameters
- 17:19 Fancy Gradient Descent Animation

#StatQuest #NeuralNetworks #Backpropagation
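The description's core idea can be sketched in Python. Each of the three parameters gets its own derivative of the sum of squared residuals (SSR), found with the Chain Rule, and Gradient Descent updates all three at once from the same set of derivatives. This is a minimal sketch under stated assumptions, not the code from the video. The network shape (one input, two softplus hidden nodes, an output of `w3 * y1 + w4 * y2 + b3`), the training data, the fixed hidden-layer values `W1`, `B1`, `W2`, `B2`, the starting values, the learning rate and the stopping rule are all hypothetical choices made for illustration.

```python
import math

# Hypothetical training data: (input, observed value) pairs.
DATA = [(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)]

# Hypothetical hidden-layer weights and biases, held fixed in this sketch
# so that only w3, w4 and b3 are optimized.
W1, B1 = 3.34, -1.43
W2, B2 = -3.53, 0.57


def softplus(x):
    # Softplus activation function: log(1 + e^x).
    return math.log1p(math.exp(x))


def hidden(x):
    # Outputs of the two hidden nodes for input x.
    return softplus(W1 * x + B1), softplus(W2 * x + B2)


def predict(x, w3, w4, b3):
    # Output node: weighted sum of the hidden outputs plus the final bias.
    y1, y2 = hidden(x)
    return w3 * y1 + w4 * y2 + b3


def ssr(w3, w4, b3):
    # Sum of squared residuals over the training data.
    return sum((obs - predict(x, w3, w4, b3)) ** 2 for x, obs in DATA)


def gradients(w3, w4, b3):
    # Chain rule: dSSR/dparam = sum of -2 * residual * dPredicted/dparam,
    # where dPredicted/dw3 = y1, dPredicted/dw4 = y2 and dPredicted/db3 = 1.
    g_w3 = g_w4 = g_b3 = 0.0
    for x, obs in DATA:
        y1, y2 = hidden(x)
        residual = obs - (w3 * y1 + w4 * y2 + b3)
        g_w3 += -2.0 * residual * y1
        g_w4 += -2.0 * residual * y2
        g_b3 += -2.0 * residual
    return g_w3, g_w4, g_b3


def gradient_descent(w3, w4, b3, learning_rate=0.1, max_steps=1000, tol=1e-4):
    for _ in range(max_steps):
        g_w3, g_w4, g_b3 = gradients(w3, w4, b3)
        # Step sizes are computed from the same gradients, so all three
        # parameters are updated simultaneously.
        steps = (learning_rate * g_w3, learning_rate * g_w4, learning_rate * g_b3)
        w3, w4, b3 = w3 - steps[0], w4 - steps[1], b3 - steps[2]
        # Stop once every step size is very small.
        if max(abs(s) for s in steps) < tol:
            break
    return w3, w4, b3


start = (0.36, 0.63, 0.0)  # hypothetical starting values for w3, w4, b3
fitted = gradient_descent(*start)
print(f"SSR before: {ssr(*start):.4f}, after: {ssr(*fitted):.6f}")
```

In this sketch the derivative for `b3` is the same expression it would be if `b3` were optimized on its own; optimizing `w3` and `w4` at the same time only adds two more derivatives, which is consistent with the chapter title "Derivatives do not change when we optimize multiple parameters".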

- 279,054 views

- Published 5 years ago by StatQuest with Josh Starmer

Backpropagation Details Pt. 1: Optimizing 3 parameters simultaneously.