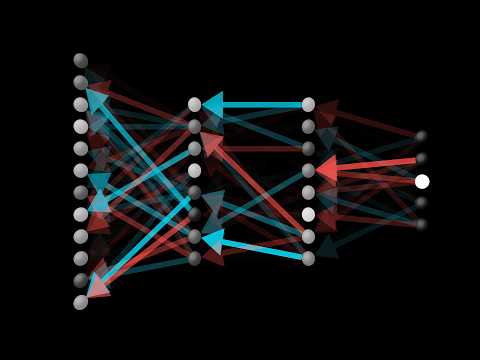

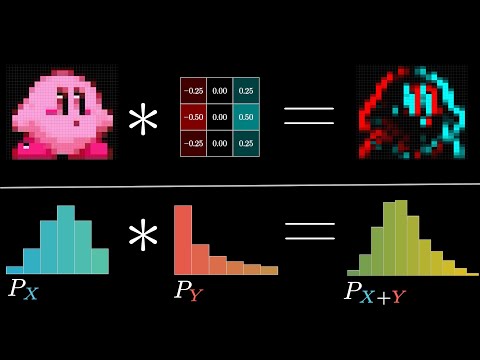

Backpropagation is the method we use to optimize parameters in a Neural Network. The ideas behind backpropagation are quite simple, but there are tons of details. This StatQuest focuses on explaining the main ideas in a way that is easy to understand.

NOTE: This StatQuest assumes that you already know the main ideas behind...

- Neural Networks:
- The Chain Rule:
- Gradient Descent:

LAST NOTE: When I was researching this 'Quest, I found this page by Sebastian Raschka to be helpful:

For a complete index of all the StatQuest videos, check out:

If you'd like to support StatQuest, please consider...

- Patreon:
- ...or... YouTube Membership:
- ...buying one of my books, a study guide, a t-shirt or hoodie, or a song from the StatQuest store...
- ...or just donating to StatQuest!

Lastly, if you want to keep up with me as I research and create new StatQuests, follow me on Twitter:

- 0:00 Awesome song and introduction
- 3:55 Fitting the Neural Network to the data
- 6:04 The Sum of the Squared Residuals
- 7:23 Testing different values for a parameter
- 8:38 Using the Chain Rule to calculate a derivative
- 13:28 Using Gradient Descent
- 16:05 Summary

#StatQuest #NeuralNetworks #Backpropagation
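The chapters above (Sum of the Squared Residuals, the Chain Rule, Gradient Descent) can be tied together in a small sketch. This is a minimal illustration under stated assumptions, not the video's own code: it assumes a tiny network with one input, two hidden nodes using the softplus activation, and one output, where every weight and bias except the final bias `b3` is already fixed at hypothetical values. The data, parameter values, learning rate, and stopping rule are all illustrative assumptions. The chain rule gives dSSR/db3 as the sum of dSSR/dPredicted (which is -2 times the residual) times dPredicted/db3 (which is 1), and gradient descent uses that derivative to step `b3` toward the value that minimizes the SSR.

```python
import math

# Hypothetical toy data (assumption): three inputs and their observed outputs.
inputs = [0.0, 0.5, 1.0]
observed = [0.0, 1.0, 0.0]

# Hypothetical, already-fixed weights and biases (assumption).
# Only the final bias b3 is optimized below.
w1, b1 = 3.34, -1.43
w2, b2 = -3.53, 0.57
w3, w4 = -1.22, -2.30


def softplus(x):
    # softplus(x) = log(1 + e^x)
    return math.log1p(math.exp(x))


def predict(x, b3):
    # Two hidden softplus nodes, scaled by w3 and w4, summed, then shifted by b3.
    y1 = softplus(w1 * x + b1)
    y2 = softplus(w2 * x + b2)
    return w3 * y1 + w4 * y2 + b3


def ssr(b3):
    # Sum of the Squared Residuals for the current value of b3.
    return sum((o - predict(x, b3)) ** 2 for x, o in zip(inputs, observed))


def d_ssr_d_b3(b3):
    # Chain rule: dSSR/db3 = sum of dSSR/dPredicted * dPredicted/db3,
    # where dSSR/dPredicted = -2 * (observed - predicted) and dPredicted/db3 = 1.
    return sum(-2.0 * (o - predict(x, b3)) * 1.0 for x, o in zip(inputs, observed))


def gradient_descent(b3=0.0, learning_rate=0.1, max_steps=1000, min_step=1e-4):
    # Repeatedly step b3 against the derivative; stop once the step size is tiny.
    for _ in range(max_steps):
        step_size = learning_rate * d_ssr_d_b3(b3)
        b3 -= step_size
        if abs(step_size) < min_step:
            break
    return b3


b3 = gradient_descent()
print(f"b3 = {b3:.4f}, SSR = {ssr(b3):.4f}")
```

Because only `b3` varies here, the derivative is linear in `b3`, so gradient descent converges to the mean residual of the fixed-part predictions. Backpropagation applies the same chain-rule idea to every weight and bias at once.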

- 719,003 views

- Published 5 years ago by StatQuest with Josh Starmer

Neural Networks Pt. 2: Backpropagation Main Ideas
