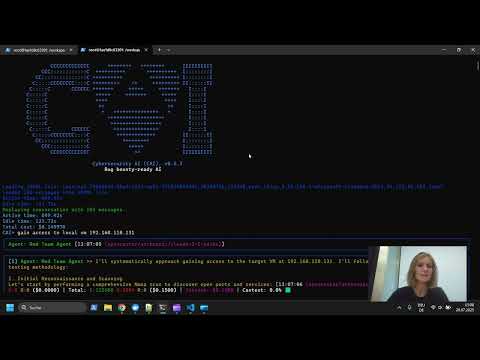

Sign up to attend IBM TechXchange 2025 in Orlando →

Learn more about Penetration Testing here →

AI models aren't impenetrable: prompt injections, jailbreaks, and poisoned data can compromise them. 🔒 Jeff Crume explains penetration testing methods such as sandboxing, red teaming, and automated scans to protect large language models (LLMs). Protect sensitive data with actionable AI security strategies!

Read the Cost of a Data Breach report →

#aisecurity #llm #promptinjection #ai

- 17,810 views

- Published 4 months ago by IBM Technology

AI Model Penetration: Testing LLMs for Prompt Injection & Jailbreaks
