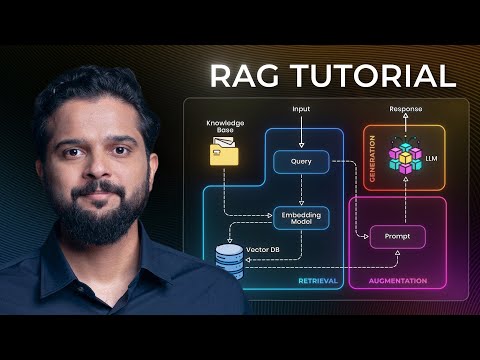

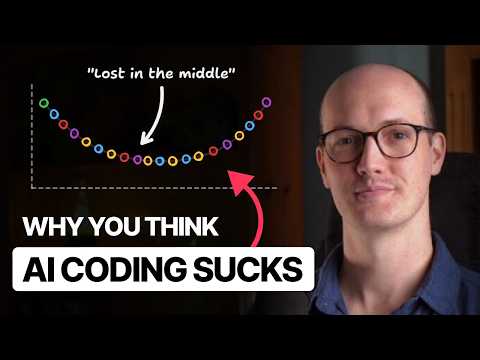

🧪 Hands-On Labs for Free -

LLMs don't truly remember: most of their "memory" is just the context window, which varies by model and caps how much text fits into a single turn. This video shows how to engineer context and add RAG (retrieval-augmented generation) to simulate durable, grounded recall.

🧠 What You'll Learn:

- ✅ Context window basics (2K to 1M tokens)
- ✅ Context engineering techniques
- ✅ RAG implementation with the OpenAI API
- ✅ Demo using Free Labs

⏰ Timestamps:

- 00:00 - Why is memory a big limitation in LLMs?
- 00:26 - Understanding context windows in LLMs
- 01:16 - How to choose the right model for your task
- 02:00 - Context engineering and the apple farm example
- 03:11 - How RAG works with vector databases
- 03:57 - One drawback of RAG
- 04:30 - Lab introduction and setup
- 05:08 - Lab Demo - Setting Up the Environment
- 05:32 - Lab Demo - Context Window (The Pi Digit Problem)
- 06:14 - Lab Demo - Context Engineering
- 07:22 - Lab Demo - Memory Management
- 08:19 - Lab Demo - Simple RAG System
- 09:52 - Key takeaways and best practices

🔔 SUBSCRIBE for cutting-edge AI tutorials that actually matter!

Check out our learning paths at KodeKloud to get started:

- ▶️ DevOps Learning Path:
- ▶️ Cloud:
- ▶️ Linux:
- ▶️ Kubernetes:
- ▶️ Docker:
- ▶️ Infrastructure as Code (IaC):
- ▶️ Programming:

#LLM #ContextWindow #LargeLanguageModels #RAG #RetrievalAugmentedGeneration #VectorDatabase #LLMMemory #ContextEngineering #PromptEngineering #TokenLimits #GPT4 #ClaudeAI #GeminiPro #OpenAI #MachineLearning #NLP #AITutorial #AIMemory #LLMLimitations #AIEducation #DeepLearning #TransformerModels #AIContext #LongTermMemory #ShortTermMemory #VectorSearch #AIOptimization #LLMTraining #MemoryManagement #AILabs #HandsOnAI #PracticalAI #AIImplementation #LLMDevelopment #aitools #kodekloud

For more updates on courses and tips, follow us on:

- 🌐 Website:
- 🌐 LinkedIn:
- 🌐 Twitter:
- 🌐 Facebook:
- 🌐 Instagram:
- 🌐 Blog:
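The core idea above is that a model only "remembers" what fits in its context window, and RAG pulls relevant text back into that window. It can be sketched without any API calls. This is a minimal illustration, not the lab's code. It assumes word counts stand in for real model tokens and that bag-of-words cosine similarity stands in for embedding search in a vector database. The function names (`retrieve`, `fit_history`, `build_prompt`) and the apple-farm documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens. Assumption: a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """RAG retrieval step: return the k documents most similar to the query.

    Assumption: a real system would compare embedding vectors stored in a
    vector database instead of word counts.
    """
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def fit_history(messages: list[str], budget: int) -> list[str]:
    """Memory management: keep the most recent messages that fit the budget.

    Walks backwards from the newest message and stops at the first one that
    would overflow, so the kept history stays contiguous.
    """
    kept, used = [], 0
    for msg in reversed(messages):
        cost = len(tokenize(msg))
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return list(reversed(kept))


def build_prompt(query: str, docs: list[str], history: list[str],
                 budget: int = 50, k: int = 2) -> str:
    """Context engineering: assemble retrieved facts, trimmed history, and the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    recent = "\n".join(fit_history(history, budget))
    return f"Context:\n{context}\n\nConversation:\n{recent}\n\nQuestion: {query}"


if __name__ == "__main__":
    # Hypothetical knowledge base loosely inspired by the apple farm example.
    farm_docs = [
        "The orchard harvests Honeycrisp apples in late September.",
        "Tractors are serviced every spring before planting.",
        "Apple storage rooms are kept near 1 degree Celsius.",
    ]
    chat = ["Hi, I run an apple farm.", "Great, how can I help?"]
    print(build_prompt("When are the apples harvested?", farm_docs, chat, k=1))
```

The resulting string is what you would send to a chat model. Because only the retrieved facts and the trimmed recent history are included, the prompt stays within a fixed budget no matter how large the knowledge base or conversation grows.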

- 9,114 views

- Published 3 months ago by KodeKloud

Why LLMs Forget—and How RAG + Context Engineering Fix It (Free Labs).


