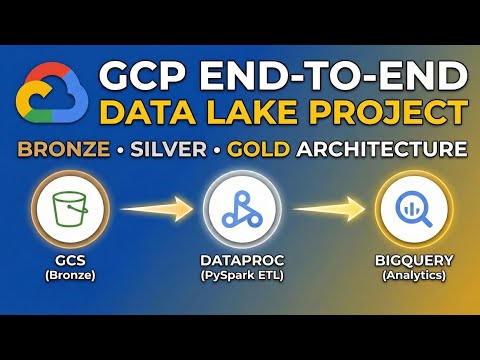

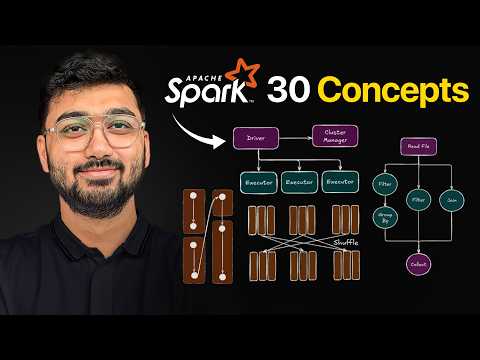

Azure End-To-End Data Engineering Project | ADF • Databricks • Synapse • PySpark 🚀

In this hands-on project, I built a complete Azure data engineering pipeline from scratch using Azure Data Factory, Azure Data Lake Gen2, Azure Databricks, PySpark, and Azure Synapse Analytics. The project follows the Bronze, Silver, and Gold architecture and shows how raw data moves through ingestion, transformation, and analytical layers in a real enterprise system. 🌐⚡

What I Worked On:

- Data Architecture and Pipeline Design 🏗️: Designed the full end-to-end architecture, including Bronze, Silver, and Gold layers, API ingestion, and Spark-based transformations.
- Data Ingestion with Azure Data Factory 🔄: Created ingestion pipelines to pull raw data from an API and store it in ADLS Bronze. Implemented pipelines, triggers, and real-time scenarios using ADF.
- Azure Data Lake and Resource Setup 🗂️: Configured storage accounts, created containers, built folder structures, and set up service principals for secure access.
- Transformations with Azure Databricks and PySpark ⚙️✨: Cleaned and transformed large datasets using PySpark. Built optimized Delta tables in the Silver layer. Performed advanced transformations for analytics in the Gold layer.
- Big Data Analytics and Delta Lake 📊: Worked with Apache Spark to run analytics, generate insights, and handle big data efficiently.
- Azure Synapse Analytics Warehouse 💡: Created external tables using OPENROWSET, built the warehouse layer, and connected Synapse to Power BI for dashboard-ready analytics.

End-to-End Architecture Flow:

API Source → ADF Pipelines → ADLS Bronze → Databricks Silver and Gold → Synapse Warehouse → Power BI Insights 📈

This project gave me real-world experience across the Azure data ecosystem and strengthened my skills in building scalable, cloud-ready data engineering pipelines. 🔥💼
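To make the Bronze → Silver → Gold flow described above more concrete, here is a minimal sketch in plain Python that stands in for the Spark logic. It is not the project's actual code: the column names (`order_id`, `country`, `amount`), the container and storage account names, and the helper functions `abfss_path`, `to_silver`, and `to_gold` are all assumptions made for illustration. Only the general `abfss://<container>@<account>.dfs.core.windows.net/<path>` URI shape for ADLS Gen2 is standard.

```python
from collections import defaultdict


def abfss_path(container: str, account: str, folder: str) -> str:
    """Build an ADLS Gen2 URI for a folder (names passed in are hypothetical)."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{folder.strip('/')}"


def to_silver(bronze_rows):
    """Bronze -> Silver: drop incomplete rows, cast types, remove duplicates."""
    seen_ids = set()
    silver = []
    for row in bronze_rows:
        raw_id = row.get("order_id")
        raw_amount = row.get("amount")
        # Skip rows that are missing the key or the measure.
        if raw_id in (None, "") or raw_amount in (None, ""):
            continue
        order_id = int(raw_id)
        # Keep only the first occurrence of each order.
        if order_id in seen_ids:
            continue
        seen_ids.add(order_id)
        silver.append(
            {
                "order_id": order_id,
                "country": str(row.get("country", "")).strip().upper(),
                "amount": float(raw_amount),
            }
        )
    return silver


def to_gold(silver_rows):
    """Silver -> Gold: aggregate cleaned rows into an analytics-ready summary."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["country"]] += row["amount"]
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    # Hypothetical raw API payload as it might land in the Bronze layer.
    bronze = [
        {"order_id": "1", "country": " de ", "amount": "10.5"},
        {"order_id": "1", "country": " de ", "amount": "10.5"},  # duplicate
        {"order_id": "2", "country": "fr", "amount": None},      # incomplete
        {"order_id": "3", "country": "DE", "amount": "4.5"},
        {"order_id": "4", "country": "fr", "amount": "7"},
    ]
    print(abfss_path("silver", "mydatalake", "/orders/"))
    print(to_gold(to_silver(bronze)))
```

In a Databricks notebook the same steps would more likely be written with PySpark DataFrame operations such as `dropna`, `dropDuplicates`, and `groupBy(...).agg(...)`, with each layer written out as a Delta table. The plain-Python version only shows the shape of the logic at each layer.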

- 12 views

- Published 1 week ago by Hack Nado
