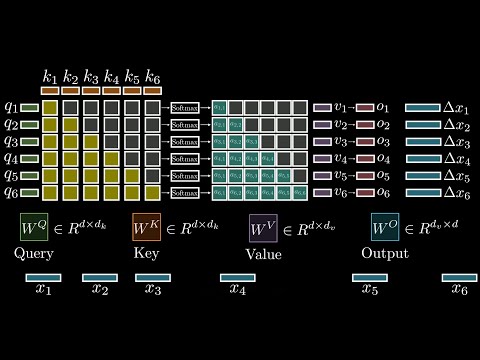

This video covers three of the top papers at NeurIPS 2025. All three won a Best Paper Award, and they were selected because each topic could have a direct, near-term impact on the AI world. The papers covered are:

1. "1000 Layer Networks for Self-Supervised RL: Scaling Depth Can Enable New Goal-Reaching Capabilities". This paper describes an approach for using a very deep network on reinforcement learning problems, resulting in a 2x to 50x improvement in performance compared to current methods.
2. "Gated Attention for Large Language Models: Non-linearity, Sparsity, and Attention-Sink-Free". This paper describes a simple architectural change to the design of language models that consistently improved training, scalability, and performance across about 30 experiments, including experiments with deep models and with transformer models trained on trillions of tokens.
3. "Artificial Hivemind: The Open-Ended Homogeneity of Language Models (and Beyond)". This is the first systematic study of how language models behave when given open-ended requests that have no ground-truth answer. The main insight is that LLMs tend to converge on the same ideas, even when a vast array of different creative solutions could be imagined.

#NeurIPS2025 #AIresearch #MachineLearning

- 14,733 views

- Published 1 week ago by AI Master Group

NeurIPS 2025: Top 3 Highlights
