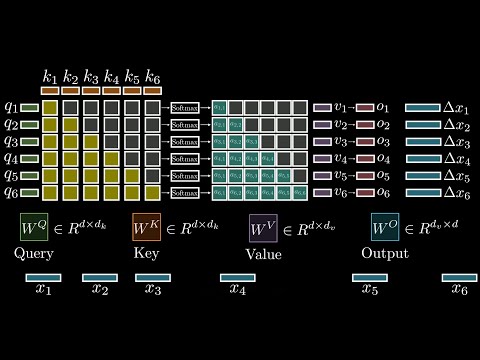

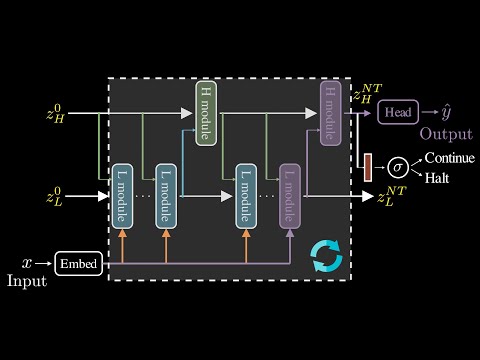

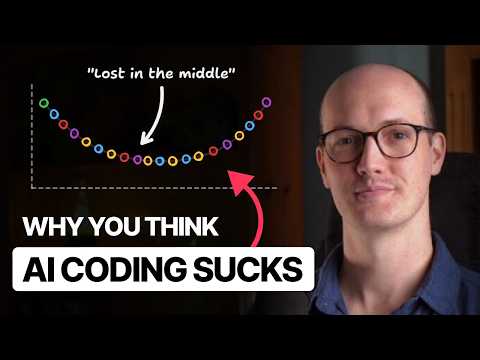

📚 Free resources (reading list + visuals):

📃 HRM paper:

▶️ Yacine's YouTube channel: @deeplearningexplained

In this video, we dive into the Hierarchical Reasoning Model (HRM), a new architecture from Sapient Intelligence that challenges the idea that scaling is the only way to advance AI. With only 27M parameters, 1,000 training examples, and no pretraining, HRM still manages to place on the notoriously difficult ARC-AGI leaderboard, right next to models from OpenAI and Anthropic.

Together with Yacine Mahdid (neuroscience researcher & ML practitioner), we'll explore:

- Why vanilla Transformers plateau on tasks like Sudoku and maze solving
- How latent recurrence and hierarchical loops give HRM more reasoning depth
- The neuroscience inspiration (theta–gamma coupling in the hippocampus 🧠)
- HRM's controversial evaluation on ARC-AGI: was it a breakthrough or bending the rules?
- What this means for the future of reasoning in AI models

Timestamps:

- 00:00 Introducing HRM
- 01:23 Why Sudoku breaks Transformers
- 03:07 Recurrence via Chain-of-Thought
- 04:22 HRM: bird's eye view
- 06:30 Latent recurrence
- 08:23 The neuroscience backing
- 11:43 The H and L modules
- 12:32 Backprop-through-time approximation
- 13:48 The outer loop
- 19:31 Training data augmentation
- 22:59 Evaluation on Sudoku
- 24:07 Evaluation on ARC-AGI
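The topics above mention latent recurrence and the H and L modules. As a rough illustration only, and not the paper's code, the nested update schedule can be sketched as follows. It assumes a slow high-level state `z_H` that is updated once per cycle and a fast low-level state `z_L` that is updated `T` times per cycle, for `N` cycles. The scalar `tanh` updates, their weights, and the function names `f_L`, `f_H`, and `hrm_forward` are hypothetical stand-ins for the Transformer blocks used in the actual model.

```python
import math
from typing import List, Optional, Tuple


# Hypothetical toy updates: in HRM these are learned Transformer blocks acting
# on vectors; here they are fixed scalar tanh maps, purely for illustration.
def f_L(z_L: float, z_H: float, x: float) -> float:
    """Fast low-level step, conditioned on the current high-level state and the input."""
    return math.tanh(0.5 * z_L + 0.3 * z_H + 0.2 * x)


def f_H(z_H: float, z_L: float) -> float:
    """Slow high-level step, conditioned on the low-level state at the end of a cycle."""
    return math.tanh(0.6 * z_H + 0.4 * z_L)


def hrm_forward(
    x: float,
    z_H: float = 0.0,
    z_L: float = 0.0,
    N: int = 2,
    T: int = 4,
    trace: Optional[List[str]] = None,
) -> Tuple[float, float]:
    """Run N high-level cycles, each made of T low-level steps (a sketch, not HRM itself)."""
    for _cycle in range(N):
        for _step in range(T):
            # L iterates quickly while the high-level context z_H stays fixed.
            z_L = f_L(z_L, z_H, x)
            if trace is not None:
                trace.append("L")
        # H updates once per cycle, using where L ended up.
        z_H = f_H(z_H, z_L)
        if trace is not None:
            trace.append("H")
    return z_H, z_L


if __name__ == "__main__":
    steps: List[str] = []
    final_H, final_L = hrm_forward(1.0, N=2, T=4, trace=steps)
    print("".join(steps))  # the update order: four L steps, then one H step, twice
```

The point of the nesting is that one forward pass performs `N * T` low-level updates without emitting any tokens, which is what "latent recurrence" refers to here. The backprop-through-time approximation discussed in the video, where gradients flow only through the final steps rather than the whole unrolled loop, would require an autograd framework and is not shown.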

- 23,076 views

- Published 3 months ago by Julia Turc

Hierarchical Reasoning Model: Substance or Hype?
