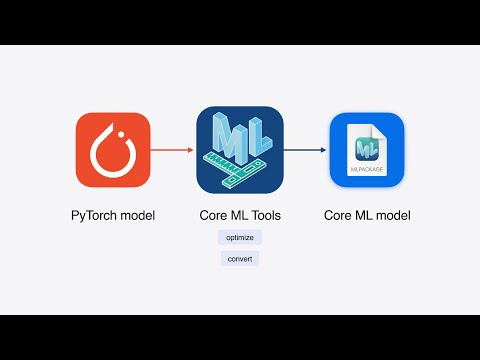

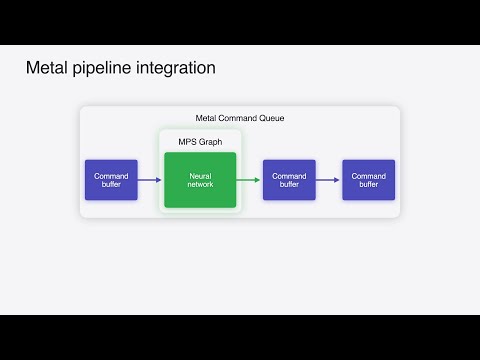

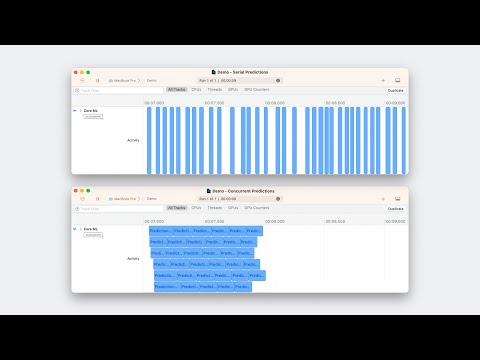

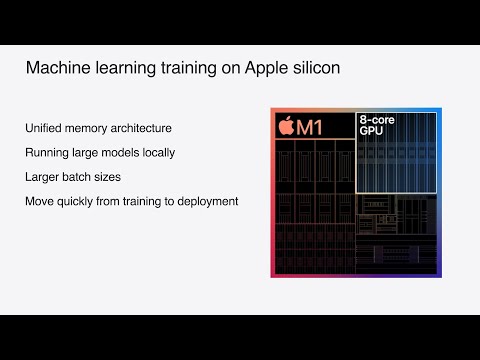

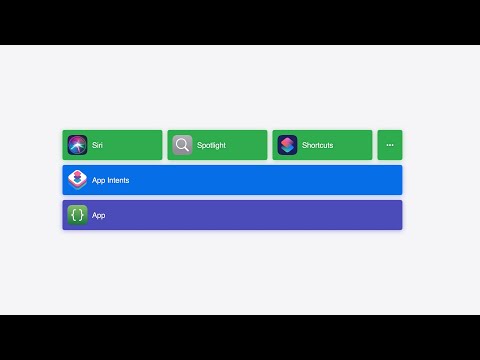

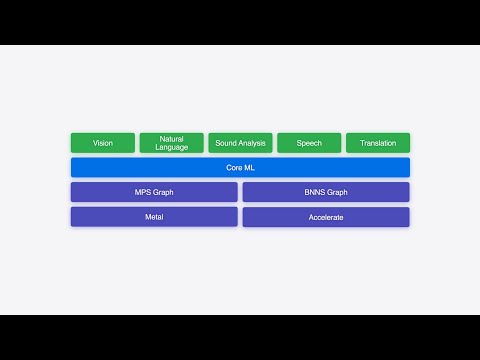

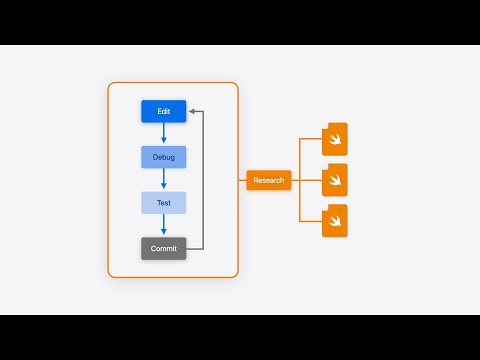

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching, which can be used together to create compelling and private on-device experiences.

Related documentation, sample code, and more:

- Stable Diffusion with Core ML on Apple Silicon
- Core ML
- Introducing Core ML
- Improve Core ML integration with async prediction
- Use Core ML Tools for machine learning model compression
- Convert PyTorch models to Core ML

Chapters:

- 00:00 - Introduction
- 01:07 - Integration
- 03:29 - MLTensor
- 08:30 - Models with state
- 12:33 - Multifunction models
- 15:27 - Performance tools

- 14,474 views

- Published 1 year ago by Apple Developer

WWDC24: Deploy machine learning and AI models on-device with Core ML | Apple
