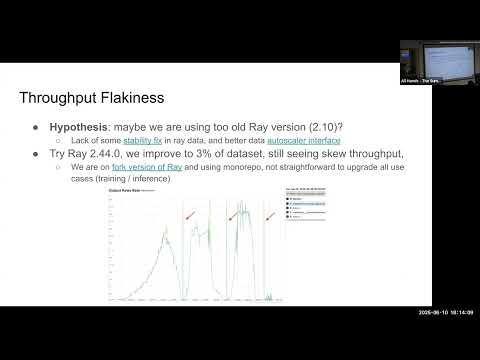

Struggling to scale your Large Language Model (LLM) batch inference? Learn how Ray Data and vLLM can unlock high throughput and cost-effective processing. This #InfoQ video dives deep into the challenges of LLM batch inference and presents a powerful solution using Ray Data and vLLM. Discover how to leverage heterogeneous computing, ensure reliability with fault tolerance, and optimize your pipeline for maximum efficiency. Explore real-world case studies and learn how to achieve significant cost reduction and performance gains.

🔗 Transcript available on InfoQ:

👍 Like and subscribe for more content on AI and LLM optimization!

What are your biggest challenges with LLM batch inference? Comment below! 👇

#LLMs #BatchInference #RayData #vLLM #AI

- 2,655 views

- Published 9 months ago by InfoQ

Scaling LLM Batch Inference: Ray Data & vLLM for High Throughput
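The description names three ideas: splitting work into batches, running CPU-bound preprocessing separately from model inference (heterogeneous computing), and retrying failed work (fault tolerance). Below is a minimal, stdlib-only Python sketch of that general pattern. It is not the pipeline presented in the talk. It assumes a hypothetical `FlakyModel` in place of a vLLM engine and a plain loop in place of Ray Data's distributed scheduling. `make_batches`, `preprocess`, `run_with_retries`, and `batch_inference` are illustrative names, not Ray or vLLM APIs.

```python
# Toy sketch (stdlib only): batched inference with a CPU preprocessing stage,
# a model stage, and per-batch retries. FlakyModel is a HYPOTHETICAL stand-in
# for an LLM engine such as vLLM; the plain loop stands in for Ray Data.
from typing import Callable, Iterator, List


def make_batches(items: List[str], batch_size: int) -> Iterator[List[str]]:
    """Yield fixed-size batches; the last batch may be smaller."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def preprocess(prompt: str) -> str:
    """Cheap CPU-side stage (hypothetical): collapse runs of whitespace."""
    return " ".join(prompt.split())


class FlakyModel:
    """Hypothetical model stand-in that fails its first `fail_first` calls."""

    def __init__(self, fail_first: int = 1) -> None:
        self.calls = 0
        self.fail_first = fail_first

    def __call__(self, batch: List[str]) -> List[str]:
        self.calls += 1
        if self.calls <= self.fail_first:
            raise RuntimeError("simulated worker failure")
        return [f"<completion for: {p}>" for p in batch]


def run_with_retries(fn: Callable[[List[str]], List[str]],
                     batch: List[str], max_retries: int) -> List[str]:
    """Call fn on the batch, retrying up to max_retries times on RuntimeError."""
    last_error: Exception = RuntimeError("no attempts made")
    for _ in range(max_retries + 1):
        try:
            return fn(batch)
        except RuntimeError as err:  # treat RuntimeError as a transient failure
            last_error = err
    raise last_error


def batch_inference(prompts: List[str], model: Callable[[List[str]], List[str]],
                    batch_size: int = 4, max_retries: int = 2) -> List[str]:
    """Preprocess every prompt, then run the model batch by batch, in order."""
    cleaned = [preprocess(p) for p in prompts]
    results: List[str] = []
    for batch in make_batches(cleaned, batch_size):
        results.extend(run_with_retries(model, batch, max_retries))
    return results


if __name__ == "__main__":
    model = FlakyModel(fail_first=1)
    prompts = ["  What is   Ray Data? ", "What is vLLM?", "Why batch?"]
    for line in batch_inference(prompts, model, batch_size=2):
        print(line)
    print("model calls:", model.calls)  # 3: one failed attempt, then two batches
```

In a real Ray Data pipeline, the preprocessing and generation steps would typically be separate `map_batches` stages, so each can be given its own CPU or GPU resources. Treat the retry loop here only as a stand-in for the framework's own task and actor retry mechanisms.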
