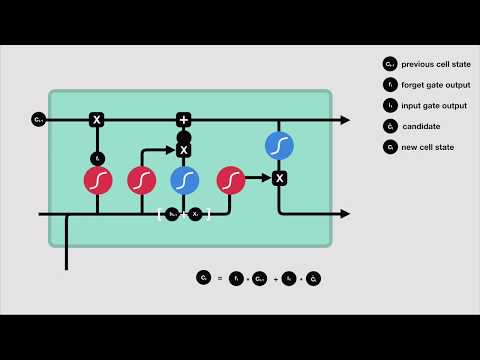

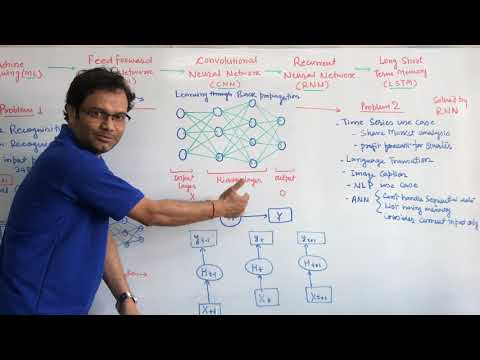

LSTM (Long Short-Term Memory) is a special variant of the recurrent neural network (RNN). A simple RNN cannot retain context across a long sentence because it quickly loses earlier information, which is why it effectively has only short-term memory. An LSTM has both long-term and short-term memory, so it can carry contextual information across many time steps.

LSTM has 2 internal states:

1. Memory cell state, which acts as long-term memory
2. Hidden state, which acts as short-term memory

The main working components of an LSTM are its gates. There are 3 types of gates:

1. Forget gate
2. Input gate
3. Output gate

This video explains the LSTM recurrent neural network in detail.

Timestamps:

- 0:00 Intro
- 1:36 Problem with RNN
- 5:30 LSTM Overview
- 7:42 Forget Gate
- 10:39 Input Gate
- 13:39 Equations and other details
- 16:41 Summary of LSTM
- 18:23 LSTM through different times
- 19:01 End
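As a rough illustration of the two states and three gates described in the summary above, here is a minimal sketch of a single LSTM time step in plain Python. It follows the commonly used LSTM formulation rather than the exact equations from the video, which are not reproduced in this text. The names `lstm_step`, `init_params`, and `affine`, the parameter layout, and the candidate value `g` are assumptions made for the example.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, b, v):
    """Return W @ v + b, where W is a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + b_k for row, b_k in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step (standard formulation, assumed for illustration).

    h_prev is the hidden state (short-term memory) and c_prev is the
    memory cell state (long-term memory) from the previous step.
    """
    v = h_prev + x_t  # concatenate previous hidden state and current input
    f = [sigmoid(z) for z in affine(params["Wf"], params["bf"], v)]    # forget gate
    i = [sigmoid(z) for z in affine(params["Wi"], params["bi"], v)]    # input gate
    g = [math.tanh(z) for z in affine(params["Wg"], params["bg"], v)]  # candidate values
    o = [sigmoid(z) for z in affine(params["Wo"], params["bo"], v)]    # output gate
    # Long-term memory: keep part of the old cell state, add gated new content.
    c_t = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # Short-term memory: a gated view of the updated cell state.
    h_t = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c_t)]
    return h_t, c_t

def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (hypothetical initialisation)."""
    rng = random.Random(seed)
    params = {}
    for gate in "figo":
        params["W" + gate] = [
            [rng.uniform(-0.1, 0.1) for _ in range(hidden_size + input_size)]
            for _ in range(hidden_size)
        ]
        params["b" + gate] = [0.0] * hidden_size
    return params

# Run the cell over a short made-up sequence of 3-dimensional inputs.
params = init_params(input_size=3, hidden_size=4)
h, c = [0.0] * 4, [0.0] * 4
sequence = [[0.5, -1.0, 0.25], [1.0, 0.0, -0.5], [0.0, 0.3, 0.9]]
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, params)
```

In this sketch, a forget gate close to 1 combined with an input gate close to 0 passes the cell state through almost unchanged, which is one way to see how the cell state can act as long-term memory.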

- 120139 views

- Published 3 years ago by Learn With Jay

LSTM Recurrent Neural Network (RNN) | Explained in Detail
