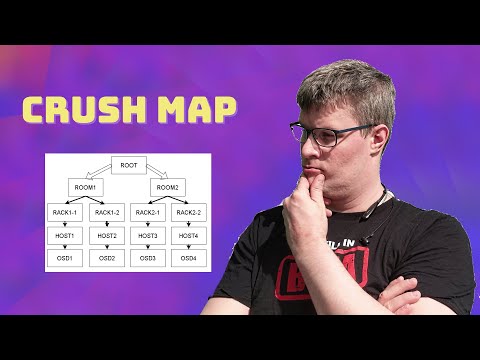

Understand Ceph's architecture: a deep dive into RADOS, the CRUSH algorithm, and the core components that make Ceph self-healing and scalable to thousands of nodes.

⏱️ TIMESTAMPS:

- 0:00 - Introduction to Ceph Architecture
- 1:30 - The RADOS Foundation
- 4:15 - Core Components Overview
- 6:30 - Monitors (MON) - The Cluster Brain
- 9:45 - OSDs - Storage Workhorses
- 13:20 - Managers (MGR) - Metrics & Management
- 16:00 - Metadata Servers (MDS) - File Intelligence
- 18:30 - CRUSH Algorithm Explained
- 22:00 - Architecture Summary

🎯 KEY CONCEPTS COVERED:

RADOS (Reliable Autonomic Distributed Object Store)

- Self-healing mechanisms
- Self-managing capabilities
- No single point of failure
- Object storage foundation

MONITORS (MON)

- Maintain cluster maps
- Quorum and consensus (Paxos)
- Cluster state coordination
- Odd monitor counts recommended (3, 5, 7): quorum needs a strict majority, so 3 monitors tolerate 1 failure and 5 tolerate 2, while an even count adds no extra failure tolerance

OSDs (Object Storage Daemons)

- Store the actual object data
- Handle replication
- Recovery and rebalancing
- Direct client communication
- BlueStore backend

MANAGERS (MGR)

- Collect cluster statistics
- Dashboard and REST API
- Plugin (module) architecture
- Performance monitoring

METADATA SERVERS (MDS)

- CephFS metadata management
- POSIX compliance
- Active/Standby configuration
- Directory sharding

CRUSH ALGORITHM

- Deterministic placement
- Pseudo-random distribution
- Failure domain awareness
- No lookup tables needed

CLUSTER MAPS (The 5 Maps)

1. Monitor Map (MON topology)
2. OSD Map (OSD status & pools)
3. PG Map (placement group states)
4. CRUSH Map (cluster topology)
5. MDS Map (metadata server states)

💡 ARCHITECTURAL PRINCIPLES:

- No metadata bottlenecks
- Clients calculate data location
- Direct OSD access (no proxies)
- Scalable to thousands of nodes

📊 DATA PATH EXPLAINED:

1. Client hashes the object name to a placement group (PG), then uses CRUSH to map that PG to a set of OSDs
2. Client contacts the primary OSD directly
3. Primary OSD replicates to the secondary OSDs
4. Acknowledgment is sent back to the client

🔍 SELF-HEALING MECHANISMS:

- Automatic OSD failure detection
- Data re-replication
- Background scrubbing
- Integrity verification

📚 COMPLETE ARCHITECTURE COURSE:

Master every component with hands-on labs.

👉 Course includes:

- ✅ Architecture theory + diagrams
- ✅ 25 hands-on labs
- ✅ Component deployment
- ✅ CRUSH map customization
- ✅ Troubleshooting guides

🔗 RELATED VIDEOS:

- CRUSH Map Deep Dive
- BlueStore Explained
- Placement Groups (PG) Masterclass
- Ceph Data Flow Visualization

📖 FURTHER READING:

- Sage Weil's Original Thesis
- Official Ceph Architecture Guide
- CRUSH Algorithm Paper

📧 QUESTIONS? Drop them in the comments!

🌐 #CephArchitecture #RADOS #CRUSHAlgorithm #CephComponents #DistributedStorage #StorageArchitecture #CephTutorial #CephDeepDive #MON #OSD #MGR #MDS #CephInternals #StorageEngineering #CephSquid #SoftwareDefinedStorage

🔔 Subscribe for more deep technical content!
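To make the "clients calculate data location" and "no lookup tables" points concrete, here is a minimal Python sketch of the client-side part of the data path. It is not the real CRUSH algorithm: Ceph uses its own hash function and weighted bucket types, whereas this sketch swaps in SHA-256 and simple rendezvous (highest-random-weight) hashing. The function names, the `topology` dictionary, and the host and OSD identifiers are all hypothetical and exist only for illustration.

```python
import hashlib


def _h(*parts: object) -> int:
    """Deterministic 64-bit hash of the parts (a stand-in, not Ceph's own hash)."""
    data = "/".join(str(p) for p in parts).encode()
    return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")


def object_to_pg(pool_id: int, object_name: str, pg_num: int) -> int:
    """Step 1a: hash the object name into one of the pool's placement groups."""
    return _h(pool_id, object_name) % pg_num


def pg_to_osds(pool_id: int, pg: int, topology: dict[str, list[int]],
               replicas: int) -> list[int]:
    """Step 1b: map a PG to OSDs, one per host (the failure domain here).

    Assumption: rendezvous hashing replaces CRUSH's bucket selection.
    Every client that holds the same topology computes the same answer,
    so no central lookup table is consulted.
    """
    # Rank hosts by a per-PG pseudo-random score and keep the top `replicas`.
    hosts = sorted(topology, key=lambda host: _h(pool_id, pg, host), reverse=True)
    # Within each chosen host, pick the OSD with the highest per-PG score.
    return [max(topology[host], key=lambda osd: _h(pool_id, pg, osd))
            for host in hosts[:replicas]]


def locate(pool_id: int, object_name: str, pg_num: int,
           topology: dict[str, list[int]], replicas: int = 3) -> tuple[int, list[int]]:
    """Return (pg, osds); the first OSD in the list plays the primary role."""
    pg = object_to_pg(pool_id, object_name, pg_num)
    return pg, pg_to_osds(pool_id, pg, topology, replicas)


if __name__ == "__main__":
    # Hypothetical cluster: four hosts with two OSDs each.
    topology = {"host-a": [0, 1], "host-b": [2, 3],
                "host-c": [4, 5], "host-d": [6, 7]}
    pg, osds = locate(pool_id=1, object_name="my-object", pg_num=128,
                      topology=topology)
    print(f"PG {pg} -> primary osd.{osds[0]}, secondaries {osds[1:]}")
```

Because the result depends only on the object name and the shared topology, any client can find the primary OSD on its own and contact it directly. The primary then handles replication to the secondaries, as in steps 2 to 4 of the data path.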

- 208 views

- Published 2 months ago by EC INTELLIGENCE

Ceph Architecture Deep Dive: RADOS, CRUSH Algorithm & Core Components Explained (2025)
