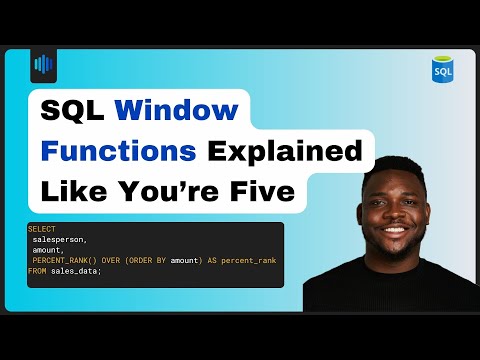

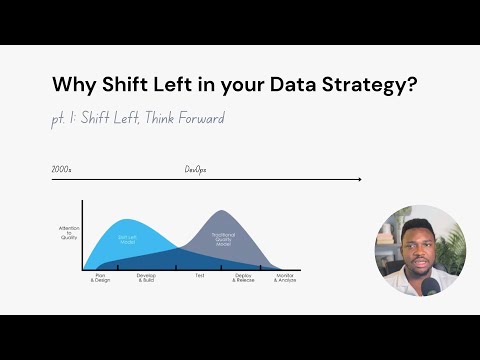

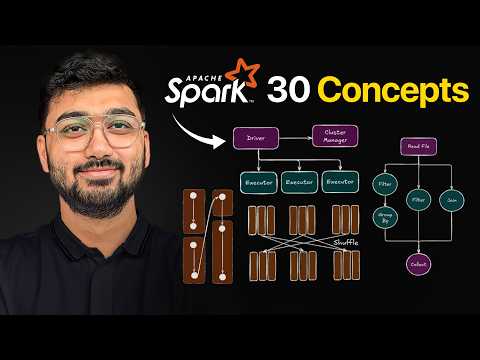

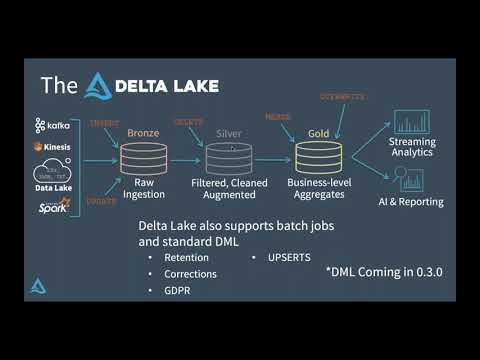

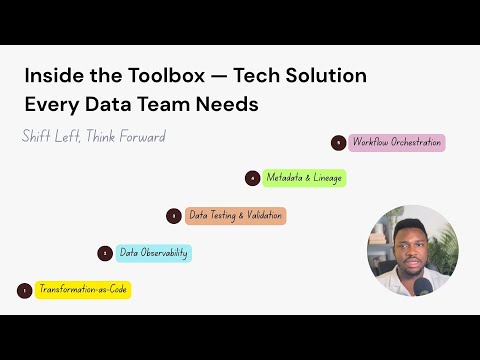

In this video, I walk you through a full-scale data pipeline for processing and analyzing news articles using the modern medallion architecture (Bronze → Silver → Gold). The pipeline is built on Databricks and utilizes PySpark, Delta Lake, and Hive Metastore, with integrated sentiment analysis using TextBlob and robust data quality validation mechanisms.

🔧 Technologies Used:

- Apache Spark (PySpark)
- Delta Lake (ACID Transactions)
- Azure Data Lake Gen2 (Storage)
- Hive Metastore / Unity Catalog (Metadata Management)
- TextBlob (NLP Sentiment Analysis)
- Databricks (ETL Orchestration)

📌 What You'll Learn:

- How to ingest data from APIs and store in Delta format
- Dynamic data quality checks and quarantining bad records
- Enriching data with NLP sentiment scores
- Building star-schema data models with fact/dim tables
- Writing clean data to Hive and exposing it for BI

📁 GitHub Repo: 👉

📌 Referenced Video

- How to Provision the Medallion Architecture on Azure ADLS using Terraform:
- Medallion Architecture Explained: From Raw Data to Business Insights:
- How to Create Azure Key Vault and Connect with Databricks:
- How to Design a Data Model Using Python and SQLite:
- How to Connect to Databricks from PowerBI:

-----

🔥 Don't forget to Like, Comment, and Subscribe for more data engineering content!

#dataengineering #Databricks #DeltaLake #ApacheSpark #PySpark #NLP #SentimentAnalysis #BigData #ETL #Hive #Lakehouse #MedallionArchitecture
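The quality-check, quarantine, and sentiment-enrichment steps named in the description can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the code from the video: the field names (`title`, `url`, `published_at`), the rule set, the `0.1` threshold, and every function name here are hypothetical. The scoring function is passed in as a parameter so the sketch runs without extra packages; in a real pipeline, TextBlob's `TextBlob(text).sentiment.polarity` could plausibly fill that role, and the same split would likely be expressed as PySpark DataFrame filters writing to Delta tables.

```python
# Hypothetical rule set: field name -> predicate that must hold for a valid record.
RULES = {
    "title": lambda v: isinstance(v, str) and v.strip() != "",
    "url": lambda v: isinstance(v, str) and v.startswith(("http://", "https://")),
    "published_at": lambda v: isinstance(v, str) and len(v) >= 10,
}


def failed_rules(record, rules=RULES):
    """Return the names of the fields whose rule the record fails."""
    return [name for name, check in rules.items() if not check(record.get(name))]


def split_valid_and_quarantined(records, rules=RULES):
    """Split records into (valid, quarantined); quarantined rows keep their failure reasons."""
    valid, quarantined = [], []
    for record in records:
        failures = failed_rules(record, rules)
        if failures:
            # Keep the bad row for later inspection instead of dropping it.
            quarantined.append({**record, "failed_rules": failures})
        else:
            valid.append(record)
    return valid, quarantined


def label_sentiment(polarity, threshold=0.1):
    """Map a polarity score in [-1, 1] to a coarse label (threshold is an assumption)."""
    if polarity > threshold:
        return "positive"
    if polarity < -threshold:
        return "negative"
    return "neutral"


def enrich(records, score):
    """Attach a polarity score and label to each record, using the supplied scorer."""
    enriched = []
    for record in records:
        polarity = score(record["title"])
        enriched.append(
            {**record, "polarity": polarity, "sentiment": label_sentiment(polarity)}
        )
    return enriched
```

Keeping the rules in a dictionary means new checks can be added without touching the split logic, which is one way to read "dynamic" data quality checks; the video's actual mechanism may differ.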

- 3950 views

- Published 7 months ago by The Data Signal

📰 End-to-End News Data Pipeline | Databricks, PySpark, Delta Lake, Hive, and Sentiment Analysis
