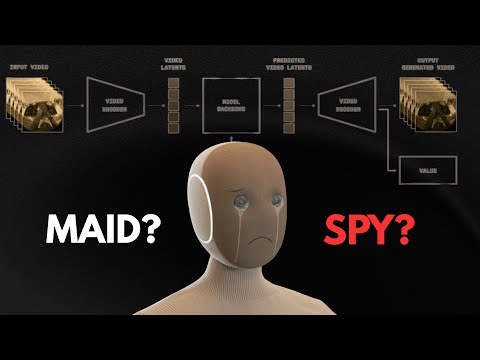

I built a model that predicts YouTube views just from a video’s title + thumbnail. This project actually started as a generative idea: I scraped thousands of video descriptions and tried summarizing them with a local LLM, but the process was painfully slow. So I pivoted to something cleaner and more scalable—using BERT for titles and CLIP for thumbnails. I ended up scraping 19,786 real YouTube title–thumbnail pairs with Selenium, extracted embeddings from both models, and trained a regression model to estimate how many views each video would get. In this video, I cover: Why I abandoned the original generative plan How Selenium scraped nearly 20k samples How I combined BERT (text) + CLIP (image) What matters more: titles or thumbnails What this dataset teaches about social proof If you’re into ML, multimodal models, computer vision, artifiical intelligence or YouTube analytics, this will give you a real-world example of building a practical prediction pipeline. Tools: Python, PyTorch, Selenium, BERT, CLIP, local LLMs #artificialintelligencemodel #aimodel #machinelearning #tech #devlog #datascience #computervision #python #openai 00:00 Intro 00:50 Project Pipeline 02:08 BERT 02:55 Change of Project Pipeline 03:08 CLIP 04:26 Trying the model with REAL videos

- 465Просмотров

- 1 неделя назадОпубликованоyesotech

I trained an AI for virality...and it worked

Похожее видео

Популярное

сериал

Universal picturesworkingtitie nanny McPhee

операция реклама

dora dasha

Красная гадюка 7

Лихие 2

крот и автомобильчик

щенячий патруль реклама

Halloween boo boo s

смешарики спорт

Universal effects not scary

Красная гадюка 4 серия

Смешари Пин код

Красная гадюка 17 серия

алиса в стране чудеса

Лимпопо

Потерянный снайпер 4 серия

健屋

Барбоскины Лучший день

navy boat crew

Красная гадюка5

Никелодеон

ТИМА И ТОМА

Walt Disney pictures 2011 in g major effects

Малыш хиппо

Universal picturesworkingtitie nanny McPhee

операция реклама

dora dasha

Красная гадюка 7

Лихие 2

крот и автомобильчик

щенячий патруль реклама

Halloween boo boo s

смешарики спорт

Universal effects not scary

Красная гадюка 4 серия

Смешари Пин код

Красная гадюка 17 серия

алиса в стране чудеса

Лимпопо

Потерянный снайпер 4 серия

健屋

Барбоскины Лучший день

navy boat crew

Красная гадюка5

Никелодеон

ТИМА И ТОМА

Walt Disney pictures 2011 in g major effects

Малыш хиппо

Новини