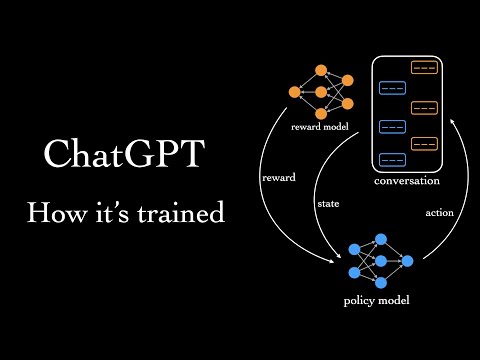

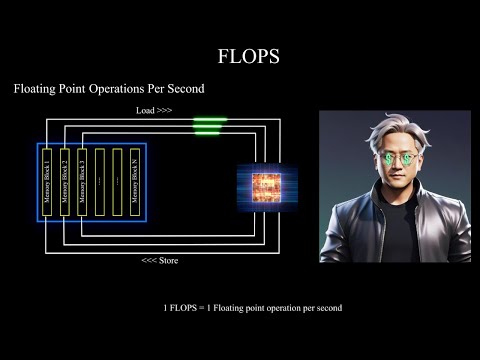

Ever wondered how massive AI models like GPT are actually trained?While everyone's talking about ChatGPT, Claude, and Gemini's capabilities, there's a revolutionary technology behind the scenes that nobody's talking about - Fully Sharded Data Parallel (FSDP). Without this breakthrough, training these massive AI models would be impossible. Let's see this hidden hero of the AI revolution! ⏰ Timestamps: 0:00 - Introduction 1:02 - Sharding Explained 1:16 - Traditional training methods 1:29 - Why FSDP over DDP 1:53 - The GPU Memory Crisis with Example 5:34 - How FSDP solved the Crisis 7:13 - Forward pass with All Gather 8:29 - Backward pass with Reduce scatter 9:44 - Equation to remember 9:58 - Thanks to NCCL 10:10 - Things to remember 🔍 In this video, you'll discover: - Why even the most powerful single GPU can't train modern LLM - Calculations: How much memory GPT-2 actually needs - The clever trick that makes training possible - Real examples with actual numbers and calculations - Why this matters for the future of AI 🧮 Technical deep-dive includes: - Step-by-step memory calculations - All Gather and Reduce Scatter - Multi-GPU orchestration explained simply 📚 Thanks to these articles/papers - PyTorch FSDP Paper: - Meta Engineering Blog: #AI #ChatGPT #MachineLearning #DeepLearning #PyTorch #FSDP #TechSecrets #aitechnology 👉 Contact me: - Instagram: - Email: huttofdevelopers@ - Discord: developershutt#7415 ❤️ If you want to stay ahead in AI technology, hit subscribe and the notification bell! ✍️ Detailed blog post coming soon - stay tuned!

- 5153Просмотров

- 1 год назадОпубликованоDevelopers Hutt

The SECRET Behind ChatGPTs Training That Nobody Talks About | FSDP Explained

Похожее видео

Популярное

красная гадюка 17-20 серия

Universal major 4

Смывайся

Бурное безрассудно 2 часть

ЛЯПИК ЕДЕТ В ОКИДО

Стрекоза и муравей

Финал

Грань правосудия 2

Лихач 4сезон 10-12

Wb effects not scary

Европа плюс ТВ

humiliation

agustin marin i don

опасность

Classic caillou gets grounded on thanksgiving

Василь котович

Nick jr

губка боб бабуся

красный тарантул

Грань правосудия

Грань провосудия 4серия

undefined

Профиссионал

психушка

Universal major 4

Смывайся

Бурное безрассудно 2 часть

ЛЯПИК ЕДЕТ В ОКИДО

Стрекоза и муравей

Финал

Грань правосудия 2

Лихач 4сезон 10-12

Wb effects not scary

Европа плюс ТВ

humiliation

agustin marin i don

опасность

Classic caillou gets grounded on thanksgiving

Василь котович

Nick jr

губка боб бабуся

красный тарантул

Грань правосудия

Грань провосудия 4серия

undefined

Профиссионал

психушка

Новини