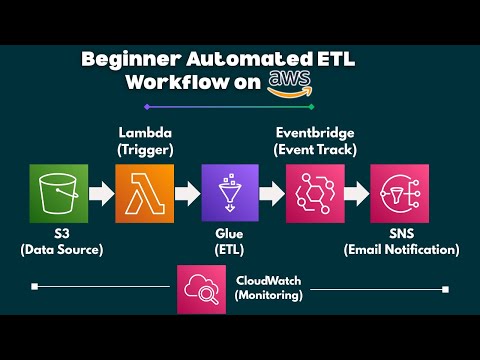

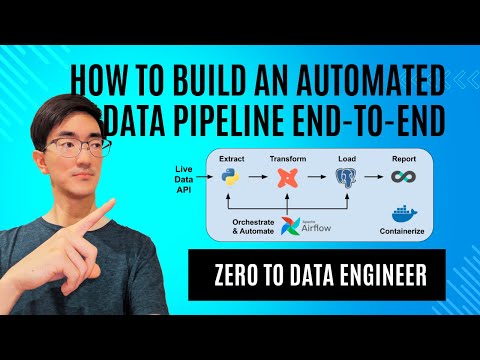

Hey Data Engineering Enthusiasts!! In this video we will build an ETL data pipeline using Apache Airflow. The pipeline extracts data engineering books from Amazon and stores them in a Postgres database. It runs on a schedule and pulls data from the website on each run. This video will help you build a basic data pipeline and collect a repository of data engineering books at the same time.

GitHub link:

Timestamps:
- 00:00 - Intro
- 01:00 - Pipeline Design
- 03:16 - Install Airflow
- 04:49 - Install PGAdmin
- 05:44 - Create Books db
- 06:45 - Create Postgres connection from Airflow
- 07:27 - Build DAG
- 09:32 - Define functions
- 10:43 - Add Tasks
- 11:26 - Dependencies
- 11:48 - Manually Trigger DAG
- 12:14 - Query data on PGAdmin
- 12:42 - Conclusion

Links:
- Airflow Documentation -

Code for PG Admin:

```yaml
postgres:
  ports:
    - "5432:5432"   # publish the Postgres container's port 5432 on host port 5432
pgadmin:
  container_name: pgadmin4_container2
  image: dpage/pgadmin4
  restart: always
  environment:
    PGADMIN_DEFAULT_EMAIL: admin@
    PGADMIN_DEFAULT_PASSWORD: root
  ports:
    - "5050:80"     # pgAdmin web UI (port 80 in the container) on host port 5050
```

Hope you enjoy this video :) Let me know in the comments what you think of this video!!
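The extract, clean and load steps described above can be sketched in plain Python. This is a minimal sketch under several assumptions, not the code from the video: the HTML markup, the `SAMPLE_HTML` data and all function names are hypothetical, real Amazon pages use different markup that changes often, and Python's built-in `sqlite3` stands in for Postgres so the example runs without a database server.

```python
import sqlite3
from html.parser import HTMLParser

# Hypothetical listing markup: each book is a <div class="book"> holding
# <span class="title">, <span class="author"> and <span class="price">.
# This is NOT Amazon's real markup; it only illustrates the parsing step.
SAMPLE_HTML = """
<div class="book"><span class="title">Example Book One</span>
  <span class="author">Author A</span><span class="price">$10.00</span></div>
<div class="book"><span class="title">Example Book Two</span>
  <span class="author">Author B</span><span class="price">$12.50</span></div>
<div class="book"><span class="title">Example Book One</span>
  <span class="author">Author A</span><span class="price">$10.00</span></div>
"""


class BookListingParser(HTMLParser):
    """Collects {title, author, price} dicts from the hypothetical markup."""

    FIELDS = {"title", "author", "price"}

    def __init__(self):
        super().__init__()
        self.books = []
        self._current = None  # dict for the book currently being parsed
        self._field = None    # which field's text we are reading, if any

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "div" and cls == "book":
            self._current = {}
        elif tag == "span" and cls in self.FIELDS and self._current is not None:
            self._field = cls

    def handle_data(self, data):
        if self._field is not None:
            self._current[self._field] = self._current.get(self._field, "") + data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "div" and self._current is not None:
            self.books.append(self._current)
            self._current = None


def extract_books(html):
    """Extract step: parse raw HTML into a list of dicts."""
    parser = BookListingParser()
    parser.feed(html)
    parser.close()
    return parser.books


def transform_books(books):
    """Transform step: drop duplicate titles and turn '$10.00' into 10.0."""
    seen, rows = set(), []
    for book in books:
        title = book.get("title")
        if title and title not in seen:
            seen.add(title)
            price = float(book.get("price", "").lstrip("$") or 0)
            rows.append((title, book.get("author", ""), price))
    return rows


def load_books(rows, conn):
    """Load step: create the table if needed and insert rows, skipping
    titles already stored so repeated scheduled runs do not duplicate data."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS books "
        "(title TEXT PRIMARY KEY, authors TEXT, price REAL)"
    )
    conn.executemany("INSERT OR IGNORE INTO books VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_books(transform_books(extract_books(SAMPLE_HTML)), conn)
    print(conn.execute("SELECT title, price FROM books ORDER BY title").fetchall())
```

In an Airflow DAG, each step would typically become a task (for example with `PythonOperator(task_id=..., python_callable=...)` or the `@task` decorator) and the tasks would be chained with `>>`. Against Postgres, the load step would go through the connection created in Airflow (for instance via the Postgres provider's `PostgresHook(postgres_conn_id=...)`), using `%s` placeholders and `ON CONFLICT DO NOTHING` in place of SQLite's `INSERT OR IGNORE`. Keying the table on the title is a design choice here so that re-running the schedule is idempotent.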

- 72,910 views

- Published 1 year ago by Sunjana in Data

Airflow for Beginners: Build Amazon books ETL Job in 10 mins
