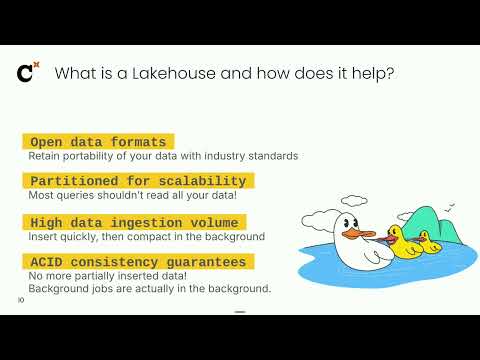

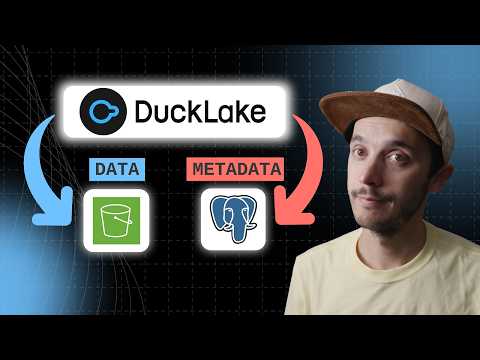

This talk was recorded at NDC Oslo in Oslo, Norway.

In the past decade the industry has seen hundreds of new databases. Most of these newcomers are operational databases, meant for online workloads and for serving as the primary datastore of an application. A handful are meant for analytical use cases, mainly large-scale big data workloads. That makes DuckDB an interesting exception: it is built for workloads that are too big for traditional databases, but not so big that they justify complicated big data tools. It is a lightweight, open-source analytical database for people with gigabytes or single-digit terabytes of data, not companies with hundreds of terabytes and teams of data engineers.

In this session we'll take DuckDB out for a test drive with live demos and a discussion of interesting use cases. We'll see how to use it to quickly run analytical queries on data from multiple data sources. We'll look at how to use DuckDB to transform and manipulate diverse datasets, such as turning a bunch of raw CSV data in S3 into a set of tables in MySQL with a single command. We'll check out its embedded capabilities by running the database directly inside a Python application. And finally, we'll build a quick-and-dirty data lake with DuckDB, without any complicated big data tools.

- 5486 views

- Published 4 months ago by NDC Conferences

Analytics for not-so-big data with DuckDB - David Ostrovsky - NDC Oslo 2025
