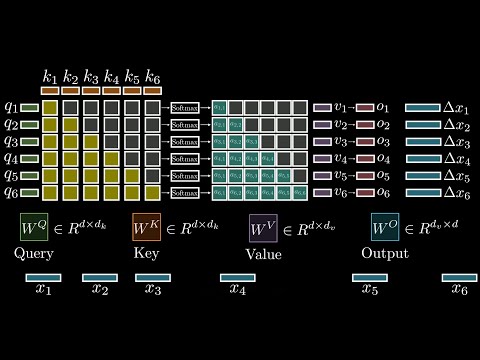

Video 1 of 6 | Mastering LLM Techniques: Inference Optimization. In this episode we break down the two fundamental phases of LLM inference:

- Prefill (context or prompt loading): the compute-intensive step that ingests the entire prompt and builds the KV cache.
- Decode: the token-by-token generation phase that is typically memory-bandwidth-bound and far more latency-sensitive.

Source & Credits: NVIDIA's excellent post "Mastering LLM Techniques: Inference Optimization" on the NVIDIA Developer Blog. Special thanks to Kyle Kranen for recommending the post.
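To make the compute-bound versus memory-bandwidth-bound contrast concrete, here is a rough back-of-the-envelope sketch. It is not taken from the video or the NVIDIA post. It estimates arithmetic intensity (FLOPs per byte moved) for a single square weight matrix when it processes a whole prompt at once (prefill) versus one token (decode). The function name, the hidden size of 4096, the prompt length of 2048, and the 2-byte (FP16) values are all illustrative assumptions. The estimate also ignores attention and KV-cache traffic.

```python
def matmul_arithmetic_intensity(n_tokens: int, d_model: int, bytes_per_value: int = 2) -> float:
    """Rough FLOPs-per-byte for an (n_tokens x d_model) @ (d_model x d_model) matmul.

    Assumes weights are read once and activations are read once and written once.
    """
    # One multiply and one add per weight, per token.
    flops = 2 * n_tokens * d_model * d_model
    # The weight matrix must be streamed from memory regardless of token count.
    weight_bytes = d_model * d_model * bytes_per_value
    # Input activations are read and output activations are written.
    activation_bytes = 2 * n_tokens * d_model * bytes_per_value
    return flops / (weight_bytes + activation_bytes)


D_MODEL = 4096        # hypothetical hidden size
PROMPT_TOKENS = 2048  # hypothetical prompt length

# Prefill: all prompt tokens share a single pass over the weights.
prefill_intensity = matmul_arithmetic_intensity(PROMPT_TOKENS, D_MODEL)
# Decode: each new token needs a full pass over the weights by itself.
decode_intensity = matmul_arithmetic_intensity(1, D_MODEL)

print(f"prefill: {prefill_intensity:.1f} FLOPs/byte")
print(f"decode:  {decode_intensity:.3f} FLOPs/byte")
```

Under these assumptions, prefill does hundreds of times more arithmetic per byte fetched than decode. That is the intuition behind calling prefill compute-intensive and decode memory-bandwidth-bound. Batching several decode requests together raises `n_tokens` and therefore the intensity.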

- 7,041 views

- Published 6 months ago by Faradawn Yang

AI Optimization Lecture 01 - Prefill vs Decode - Mastering LLM Techniques from NVIDIA
